Using ASIC Cards for Artificial Intelligence and Machine Learning Applications

Application-specific integrated circuits (ASICs) emerged from the need for microchips built for one specific purpose rather than for general-purpose computing. An ASIC performs a defined set of functions inside a particular device.

What Is an ASIC Card?

An ASIC card is, broadly, a circuit board built around one or more ASICs. Its primary advantage is reduced size: a large number of functional blocks that would otherwise need separate components are integrated into a single chip. Each chip is designed from the ground up for a particular application, such as a memory or microprocessor interface.

Two common methods for designing the ASICs on these cards are full-custom design and gate-array design.

Gate-array design

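- Gate arrays start from prefabricated wafers carrying a fixed array of transistors; only the final metal interconnect layers are customized for the application. Because so little design work is needed to produce a working chip, non-recurring engineering (NRE) costs are much lower and production cycles are much shorter.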

Full-custom design

- Full-custom design is considerably more complex than gate-array design, because every transistor and layer is laid out specifically for the application. In exchange, the chip can deliver more capability in a smaller die, since the design is fully optimized and contains no unneeded gates.
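
The cost side of this trade-off can be made concrete with a simple model: total cost equals the one-time non-recurring engineering (NRE) cost plus the per-unit cost times production volume. The sketch below assumes, for illustration only, that full-custom's smaller die gives it a lower unit cost. The class `DesignOption`, the function `break_even_volume`, and all dollar figures are hypothetical placeholders, not industry data.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class DesignOption:
    """Hypothetical cost model for one ASIC design method."""
    name: str
    nre_cost: float   # one-time non-recurring engineering cost (USD)
    unit_cost: float  # production cost per chip (USD)

    def total_cost(self, volume: int) -> float:
        return self.nre_cost + self.unit_cost * volume


def break_even_volume(low_nre: DesignOption, high_nre: DesignOption) -> Optional[int]:
    """Smallest volume at which the high-NRE option is strictly cheaper overall.

    Returns None if the high-NRE option never pays back its extra NRE.
    """
    saving_per_unit = low_nre.unit_cost - high_nre.unit_cost
    if saving_per_unit <= 0:
        return None
    extra_nre = high_nre.nre_cost - low_nre.nre_cost
    # Total costs are equal at extra_nre / saving_per_unit units; one more unit tips the balance.
    return max(0, math.floor(extra_nre / saving_per_unit) + 1)


# Illustrative numbers only (hypothetical, not market data).
gate_array = DesignOption("gate array", nre_cost=200_000, unit_cost=12.0)
full_custom = DesignOption("full custom", nre_cost=2_000_000, unit_cost=4.0)
print(break_even_volume(gate_array, full_custom))  # 225001
```

With these made-up numbers, full-custom only pays off above roughly 225,000 units, which illustrates why gate arrays suit lower production volumes. A real comparison would also weigh time to market and performance.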

The ASIC Card and AI

Development of ASICs for artificial intelligence (AI) is growing. One example is Google's Tensor Processing Unit (TPU), a series of ASICs designed for machine learning.

Fujitsu has also developed its Deep Learning Unit (DLU), and Intel has signaled its own work on commercial AI ASICs. These efforts show that ASICs can now run narrow, specific AI functions, with the chip processing the workload in parallel.
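
The parallelism mentioned above follows from the shape of neural-network workloads. As a rough illustration (not how the TPU or any other specific ASIC is actually programmed), a fully connected layer reduces to one dot product per output, each built from multiply-accumulate (MAC) operations, and no output depends on another. The sketch below computes the outputs one after another in plain Python; the point is that each `mac_dot` call is independent, so dedicated hardware could assign separate units to them and run them simultaneously. The function names are hypothetical, and small integers stand in for the low-precision arithmetic that inference hardware often uses.

```python
from typing import List, Sequence


def mac_dot(weights: Sequence[int], inputs: Sequence[int]) -> int:
    """One output neuron: a chain of multiply-accumulate (MAC) steps."""
    acc = 0
    for w, x in zip(weights, inputs):
        acc += w * x  # one MAC: multiply, then add into the accumulator
    return acc


def dense_layer(weight_rows: Sequence[Sequence[int]], inputs: Sequence[int]) -> List[int]:
    """Fully connected layer (no bias or activation).

    Each output reads only its own weight row and the shared inputs, never another
    output, so the rows could be computed in parallel. Here they run one by one.
    """
    return [mac_dot(row, inputs) for row in weight_rows]


def mac_count(n_inputs: int, n_outputs: int) -> int:
    """MAC operations needed for one pass through an n_inputs x n_outputs layer."""
    return n_inputs * n_outputs


print(dense_layer([[1, 2, 3], [0, -1, 4]], [2, 1, -1]))  # [1, -5]
print(mac_count(1024, 1024))  # 1048576
```

A single layer with 1,024 inputs and 1,024 outputs already needs over a million MACs per inference, the kind of repetitive, narrow workload that fixed-function hardware handles well.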

Overall, an ASIC can accelerate AI algorithms beyond what general-purpose processors achieve. Nevertheless, the technology may not become widespread any time soon, because ASIC design requires substantial capital investment and frequent updates to keep pace with current manufacturing processes and new techniques.

ASIC Cards and Machine Learning

Advances in ASIC technology have given designers a direct path to implementing highly integrated, highly standardized features in a new system. In embedded machine-learning systems, for example, the ASIC must interface with a set of peripherals and a host processor.

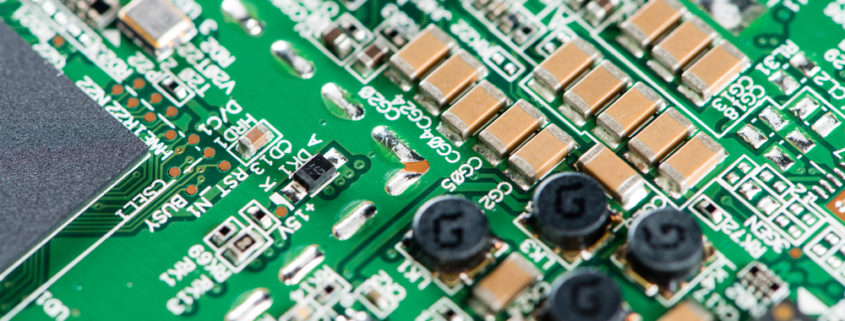

A printed circuit board that hosts an ASIC card for machine learning operates as a high-speed digital system, and it may also include an analog front-end for wireless communication or interfaces to sensors. Such boards should therefore follow high-speed PCB design guidelines, which help ensure power integrity, signal integrity, and compliance with electromagnetic interference and electromagnetic compatibility (EMI/EMC) standards.

Stack-up

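- The board stack-up should support high-speed signaling with controlled impedance: trace widths, dielectric thicknesses, and reference planes are chosen so that each signal line has a specified characteristic impedance, commonly 50 Ω for single-ended traces.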
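
Controlled impedance is set by trace geometry and the dielectric. As a first-pass estimate for an outer-layer (surface microstrip) trace, the sketch below uses the widely cited IPC-2141 closed-form approximation, Z0 = 87 / sqrt(εr + 1.41) × ln(5.98h / (0.8w + t)), and searches for the trace width that hits a target such as 50 Ω. The function names, the example geometry, and the FR-4 permittivity of 4.3 are assumptions; a production stack-up should be confirmed with a field solver and the fabricator's own data.

```python
import math


def _ipc2141_z0(w: float, h: float, t: float, er: float) -> float:
    """IPC-2141 surface-microstrip approximation, without range checking."""
    return 87.0 / math.sqrt(er + 1.41) * math.log(5.98 * h / (0.8 * w + t))


def microstrip_z0(w: float, h: float, t: float, er: float) -> float:
    """Approximate characteristic impedance (ohms) of a surface microstrip.

    w: trace width, h: dielectric height above the reference plane,
    t: copper thickness (all in the same length unit, e.g. mm);
    er: relative permittivity of the dielectric.
    """
    if not 0.1 <= w / h <= 2.0:
        raise ValueError("approximation intended only for 0.1 <= w/h <= 2.0")
    return _ipc2141_z0(w, h, t, er)


def width_for_target(z_target: float, h: float, t: float, er: float,
                     tol: float = 1e-6) -> float:
    """Bisection search for the trace width that gives z_target ohms.

    Z0 falls monotonically as the trace gets wider, so bisection converges.
    """
    lo, hi = 0.1 * h, 2.0 * h  # narrowest and widest widths in the valid range
    if not _ipc2141_z0(hi, h, t, er) <= z_target <= _ipc2141_z0(lo, h, t, er):
        raise ValueError("target impedance not reachable within 0.1 <= w/h <= 2.0")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if _ipc2141_z0(mid, h, t, er) > z_target:
            lo = mid  # impedance still too high: widen the trace
        else:
            hi = mid  # impedance at or below target: narrow the trace
    return (lo + hi) / 2


# Assumed geometry: 0.1 mm dielectric, 35 um (1 oz) copper, FR-4 with er = 4.3.
w50 = width_for_target(50.0, h=0.1, t=0.035, er=4.3)
print(f"width for 50 ohms: {w50:.3f} mm")
```

Because the approximation loses accuracy outside roughly 0.1 ≤ w/h ≤ 2.0, the functions refuse to extrapolate beyond that range rather than return a misleading value.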

Routing

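- Routing guidelines apply to high-speed digital systems in general rather than specifically to ASIC-based designs. Typical examples include routing signals over an unbroken reference plane and length-matching related signals so they arrive together.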
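
One routing check common to high-speed digital designs is length matching: related signals, such as the data lines of a parallel memory bus, must arrive within a timing (skew) budget, and every extra millimeter of trace adds delay. The sketch below assumes stripline routing, where the signal travels entirely inside the dielectric, so its delay per unit length is sqrt(εr)/c. The function names, net names, lengths, and the 10 ps budget are hypothetical. Outer-layer microstrip is somewhat faster because part of its field is in air, so a real design should use the effective permittivity reported by its stack-up tool.

```python
import math
from typing import Dict, List

C_MM_PER_PS = 0.299792458  # speed of light in vacuum, in mm per picosecond


def stripline_delay_ps_per_mm(er: float) -> float:
    """Propagation delay of a stripline trace (field fully inside the dielectric)."""
    return math.sqrt(er) / C_MM_PER_PS


def max_mismatch_mm(skew_budget_ps: float, er: float) -> float:
    """Largest length difference that stays within the skew budget."""
    return skew_budget_ps / stripline_delay_ps_per_mm(er)


def nets_out_of_budget(lengths_mm: Dict[str, float], er: float,
                       skew_budget_ps: float) -> List[str]:
    """Names of nets that trail the longest net by more than the budget allows."""
    longest = max(lengths_mm.values())
    limit = max_mismatch_mm(skew_budget_ps, er)
    return sorted(name for name, length in lengths_mm.items()
                  if longest - length > limit)


# Hypothetical byte lane routed in stripline with er = 4.0 and a 10 ps skew budget.
lane = {"DQ0": 50.0, "DQ1": 49.2, "DQ2": 48.0}
print(f"{stripline_delay_ps_per_mm(4.0):.2f} ps/mm")   # 6.67 ps/mm
print(f"{max_mismatch_mm(10.0, 4.0):.2f} mm allowed")  # 1.50 mm allowed
print(nets_out_of_budget(lane, 4.0, 10.0))             # ['DQ2']
```

Nets flagged this way would typically be lengthened with serpentine routing until they fall inside the budget.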

Analog isolation

- In systems that use ASIC cards for machine learning, the high-speed digital section should be physically separated from the analog section so that digital switching noise does not corrupt the analog signals.

Power integrity

- Allocate enough plane layers and board space for decoupling capacitors. This keeps the impedance of the power delivery network (PDN) low.
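
A common way to size a power delivery network is to compute a target impedance, the highest PDN impedance that keeps supply ripple within budget for a given transient current (Z_target = V × ripple fraction / ΔI), and then check how many decoupling capacitors are needed to stay below it. The sketch below models each capacitor as a series R-L-C circuit (ESR, ESL, capacitance) and assumes identical capacitors in parallel simply divide the impedance by their count, which ignores mounting inductance, plane capacitance, and anti-resonance between different capacitor values. The function names, supply voltage, ripple, current, and capacitor parameters are hypothetical examples.

```python
import math


def target_impedance(v_supply: float, ripple_fraction: float, i_transient: float) -> float:
    """Maximum PDN impedance (ohms) that keeps voltage ripple within budget."""
    return v_supply * ripple_fraction / i_transient


def cap_impedance(f_hz: float, c_farads: float, esr_ohms: float, esl_henries: float) -> float:
    """Magnitude of a capacitor's impedance, modeled as a series R-L-C circuit."""
    w = 2 * math.pi * f_hz
    reactance = w * esl_henries - 1.0 / (w * c_farads)
    return math.hypot(esr_ohms, reactance)


def self_resonant_freq(c_farads: float, esl_henries: float) -> float:
    """Frequency where ESL and capacitance cancel, leaving only the ESR."""
    return 1.0 / (2 * math.pi * math.sqrt(esl_henries * c_farads))


def caps_needed(z_target: float, f_hz: float, c_farads: float,
                esr_ohms: float, esl_henries: float) -> int:
    """Identical parts in parallel divide |Z| by their count (idealized)."""
    return math.ceil(cap_impedance(f_hz, c_farads, esr_ohms, esl_henries) / z_target)


# Hypothetical example: 0.9 V core rail, 3 % ripple budget, 20 A load step,
# using 100 nF capacitors with 10 mOhm ESR and 0.5 nH ESL.
z_t = target_impedance(0.9, 0.03, 20.0)
f0 = self_resonant_freq(100e-9, 0.5e-9)
print(f"target {z_t * 1e3:.2f} mOhm, SRF {f0 / 1e6:.1f} MHz")
print(caps_needed(z_t, f0, 100e-9, 0.010, 0.5e-9), "capacitors at SRF")  # 8
```

Well below its self-resonant frequency a 100 nF part's impedance rises sharply (about 1.6 Ω at 1 MHz with these values), which is why designs combine bulk and high-frequency capacitors.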


Linear MicroSystems, Inc. is proud to offer its services worldwide as well as the surrounding areas and cities around our Headquarters in Irvine, CA: Mission Viejo, Laguna Niguel, Huntington Beach, Santa Ana, Fountain Valley, Anaheim, Orange County, Fullerton, and Los Angeles.